AI Governance Maturity Assessment

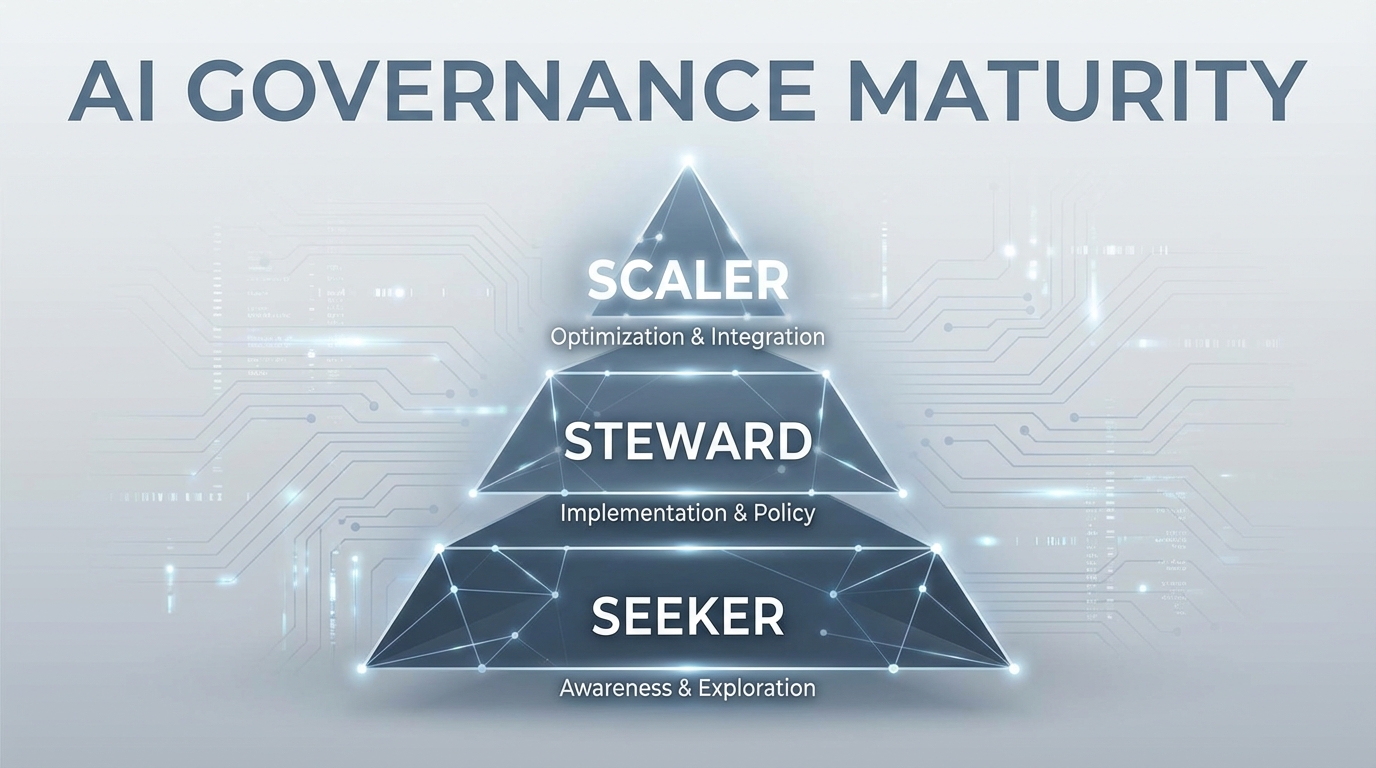

Seeker, Steward, or Scaler?

Enterprises are adopting artificial intelligence rapidly, and that adoption brings significant risks. AI governance (the processes, policies, and structures that ensure AI is used responsibly) is now a necessity for every enterprise.

The Three Levels of Maturity

1. The Seeker

Seekers have limited or no formal governance. Their AI projects are ad hoc and experimental. This approach can work for pilots, but the lack of guardrails often leads to costly "governance debt" as projects scale: controls that were skipped early must be retrofitted later, at greater expense.

2. The Steward

Stewards recognize the importance of AI ethics. They have basic policies and cross-functional teams (Legal, IT, Risk) that review projects. They use ethics checklists but still rely on manual processes, which limits their ability to scale governance across the enterprise.

3. The Scaler

Scalers have mature governance hardwired into their culture. They use automated governance pipelines, maintain model inventories, and apply standardized documentation and risk assessments (such as Model Cards). For Scalers, AI strategy is an integral part of corporate strategy.

Advancing Your Journey

Whether you are a Seeker, a Steward, or a Scaler, improving governance is a continuous process. The first step is assessing where you stand today. Try our free AI Governance Maturity Assessment tool to start building your roadmap.