capAI: Internal Review Protocol

Scaling Conformity Assessments for the EU AI Act

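The EU AI Act sorts AI systems by risk: prohibited practices, high-risk systems, and lower-risk systems. High-risk systems must pass a conformity assessment before they are placed on the market, which means documenting how the system was designed, built, tested, and monitored. capAI offers a structured procedure for producing that evidence.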

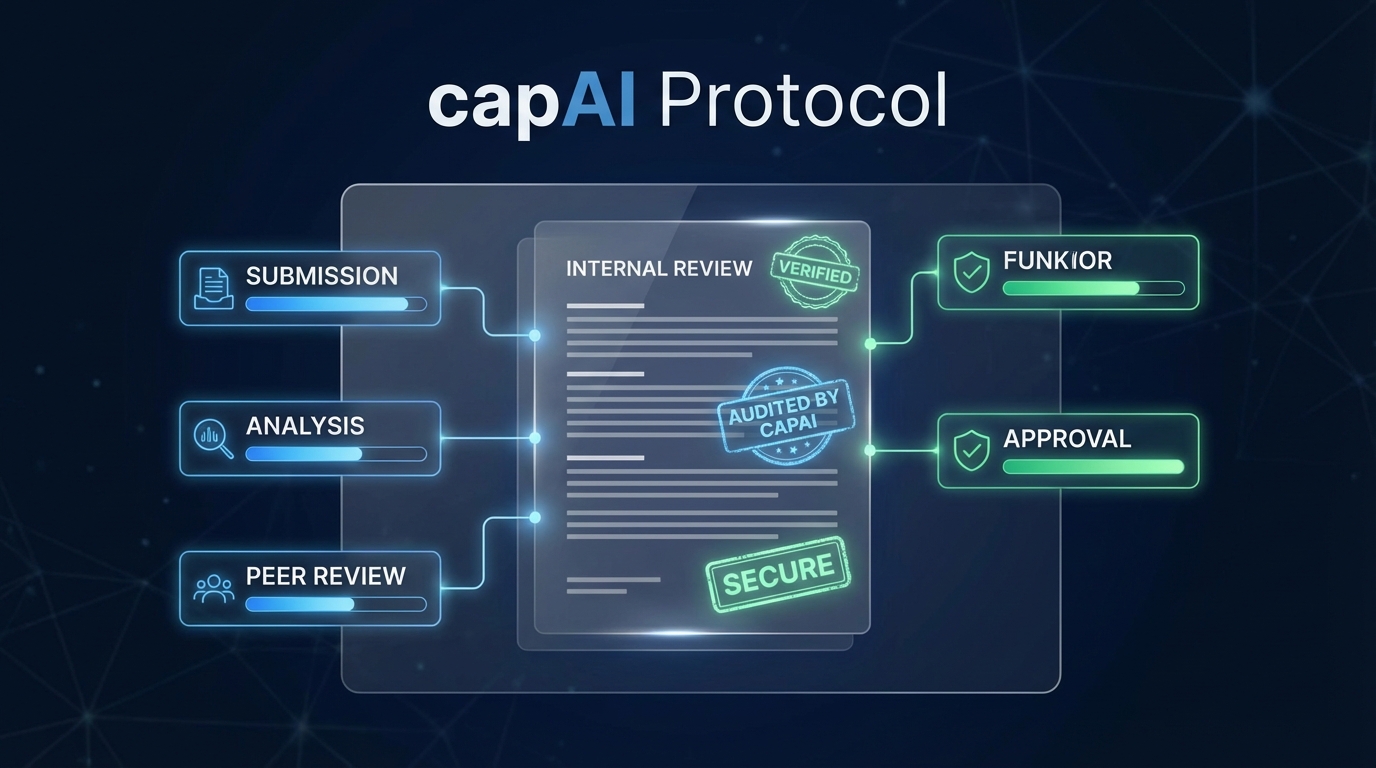

The Internal Review Protocol (IRP)

The Internal Review Protocol (IRP) is a management tool for quality assurance. It follows the AI system lifecycle through five stages:

- Design: Defining ethical values and mental models.

- Development: Technical documentation and functionality checks.

- Evaluation: Performance testing and post-launch monitoring.

- Operation: Ongoing maintenance in production.

- Retirement: Safe decommissioning of the system.
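
The five stages above can be tracked as an ordered checklist. The following is a minimal sketch, not part of capAI itself: the names `Stage`, `ReviewItem`, and `InternalReview` are hypothetical, and the checklist wording is illustrative only. It assumes Python 3.9 or later.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The five IRP lifecycle stages, in the order listed above."""
    DESIGN = 1
    DEVELOPMENT = 2
    EVALUATION = 3
    OPERATION = 4
    RETIREMENT = 5


@dataclass
class ReviewItem:
    """One checklist entry (hypothetical structure, not defined by capAI)."""
    stage: Stage
    description: str
    done: bool = False


@dataclass
class InternalReview:
    items: list[ReviewItem] = field(default_factory=list)

    def add(self, stage, description):
        """Append a new open item and return it so the caller can update it."""
        item = ReviewItem(stage, description)
        self.items.append(item)
        return item

    def open_items(self, stage):
        """Return the items of one stage that are not yet marked done."""
        return [i for i in self.items if i.stage is stage and not i.done]

    def first_incomplete_stage(self):
        """Return the earliest stage with open items, or None if none remain."""
        for stage in Stage:  # Enum members iterate in definition order
            if self.open_items(stage):
                return stage
        return None


# Illustrative usage with made-up checklist wording.
review = InternalReview()
review.add(Stage.DESIGN, "Ethical values defined").done = True
review.add(Stage.DEVELOPMENT, "Technical documentation written")
print(review.first_incomplete_stage())  # prints Stage.DEVELOPMENT
```

Modeling the stages as an ordered enumeration keeps the lifecycle order explicit, so a reviewer can always ask which stage is currently blocking sign-off.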

Transparency Artifacts

Beyond the internal review, capAI suggests two public-facing documents:

- Summary Datasheet (SDS): For the EU's public database, detailing the trade name and intended purpose.

- External Scorecard (ESC): A public reference documenting purpose, values, data nature, and governance for stakeholders.
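
As a rough illustration, the two artifacts can be modeled as simple records. This is a sketch under stated assumptions: the field names mirror only the items mentioned above, the helper `to_public_json` is hypothetical, and capAI's actual templates contain more detail than is shown here. The example values are invented.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class SummaryDatasheet:
    """SDS fields named in the text above; the real template has more."""
    trade_name: str
    intended_purpose: str


@dataclass(frozen=True)
class ExternalScorecard:
    """ESC fields named in the text above; the real template has more."""
    purpose: str
    values: list[str]
    data_nature: str
    governance: str


def to_public_json(artifact):
    """Serialize an artifact to JSON, refusing to publish empty fields."""
    record = asdict(artifact)
    missing = [name for name, value in record.items() if not value]
    if missing:
        raise ValueError(f"empty fields: {', '.join(missing)}")
    return json.dumps(record, indent=2, sort_keys=True)


# Invented example values, for illustration only.
sds = SummaryDatasheet(
    trade_name="ExampleScreen",
    intended_purpose="Ranking job applications for human review",
)
print(to_public_json(sds))
```

Rejecting empty fields before serialization is one simple way to keep an incomplete record from being published by accident.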

Conclusion

Frameworks like capAI bridge the gap between high-level ethical principles and concrete practice. By following a structured procedure across the whole lifecycle, companies are better placed to build AI that is robust and ethical, and to show regulators and the public how they did it.